As the world transitions towards cleaner energy solutions, accurate forecasting of renewable energy output becomes essential for grid stability and efficient energy management. This project explores the application of Long Short-Term Memory (LSTM) networks to predict short-term power output for wind and solar farms. Using publicly available meteorological data as well as university data, this research presents a scalable approach to optimizing renewable energy scheduling, minimizing curtailment, and ensuring a more reliable clean energy supply.

Project Overview

This project was developed as part of my Master’s major research project at Toronto Metropolitan University. Due to university policies, the full research paper is not publicly available, but I can share insights into the methodology, dataset, and key takeaways from the study.

The primary research questions explored were:

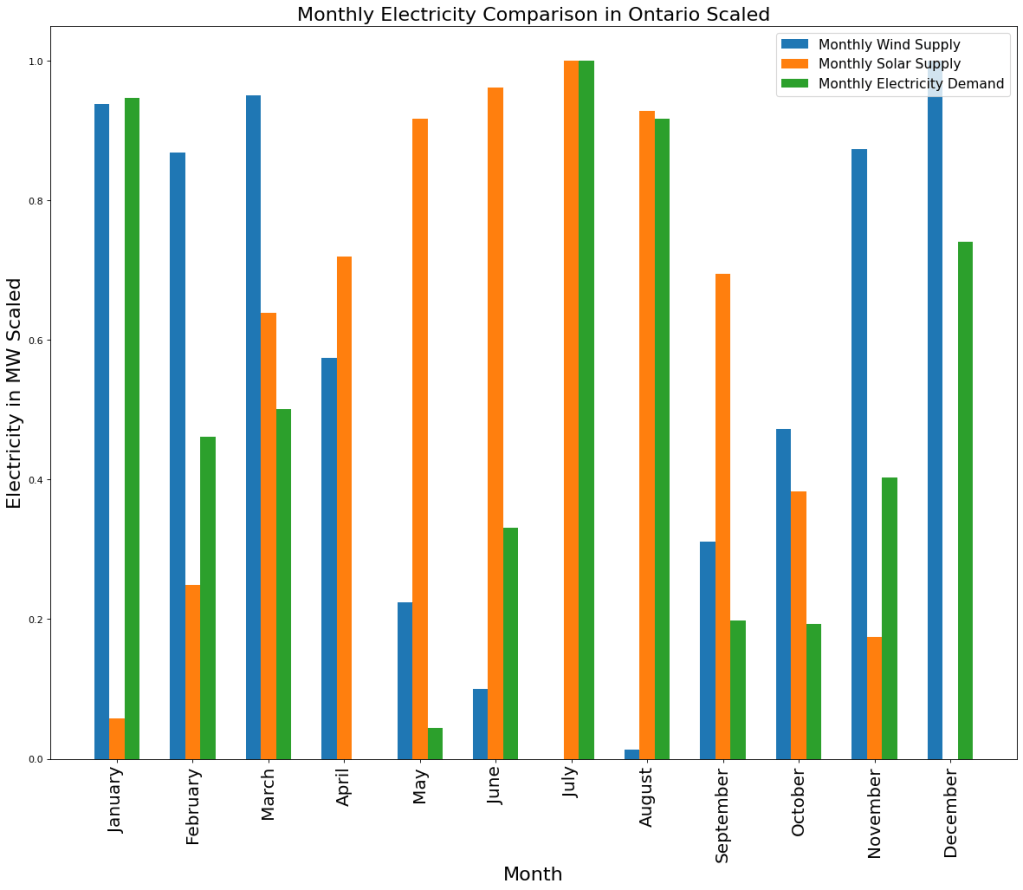

- Can Ontario’s power demands be fully met using renewable energy?

- What are the key meteorological features influencing renewable energy generation?

- Which machine learning algorithms are best suited for predicting short-term renewable energy output?

Data Collection and Feature Engineering

The dataset used for this study was sourced from:

- Independent Electricity System Operator (IESO) – Provided historical power generation data.

- NASA Solar Satellite Dataset – Offered meteorological data on key weather conditions affecting renewable energy output.

The dataset contained 50 meteorological features collected over four years, with key variables including:

✅ Wind Speed – Crucial for wind turbine output.

✅ Solar Radiation – Directly impacts photovoltaic energy generation.

✅ Air Temperature & Dew Point – Affect the efficiency of both wind and solar power systems.

✅ Humidity – Can influence atmospheric transparency and wind power generation.

Data Processing & Exploratory Analysis

Before training, exploratory data analysis (EDA) was performed to:

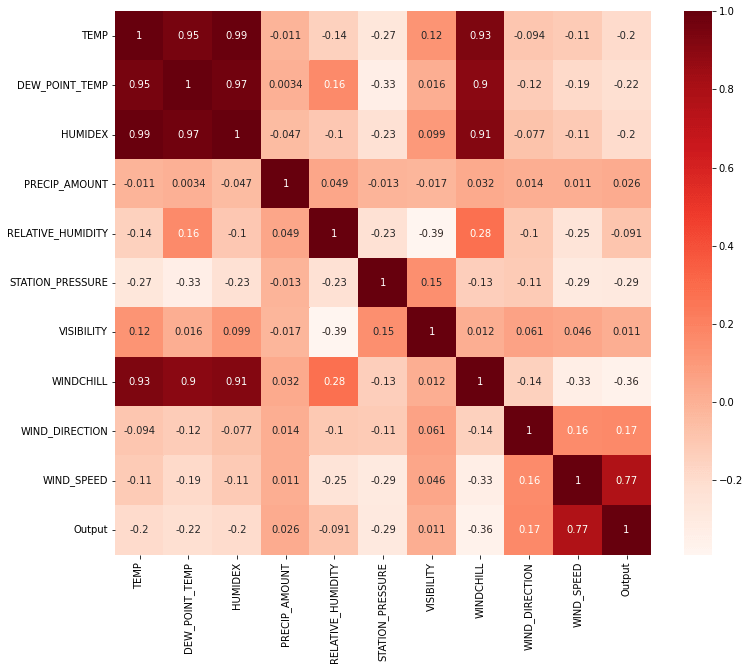

- Identify correlations between meteorological features and renewable energy output.

- Handle missing values using interpolation and mean imputation.

- Remove outliers to ensure data consistency.
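A minimal pandas sketch of these cleaning steps is below. It assumes the hourly weather data sits in a DataFrame with numeric columns only, and the 1.5 × IQR outlier rule is an assumption, since the write-up does not say which criterion was used. Outliers are blanked out first so the same gap-filling handles them and the hourly index stays regular. Interior gaps are then linearly interpolated, and leading or trailing gaps (which interpolation cannot bound) fall back to the column mean. The function name `clean_weather` is hypothetical.

```python
import pandas as pd

def clean_weather(df: pd.DataFrame, iqr_factor: float = 1.5) -> pd.DataFrame:
    """Hypothetical cleaning helper: IQR outlier removal, interpolation, mean imputation.

    Assumes df holds numeric hourly columns in time order.
    """
    out = df.copy()

    # Outlier removal (assumed rule): values more than iqr_factor * IQR outside
    # the quartiles are set to NaN so they are treated like missing readings.
    q1, q3 = out.quantile(0.25), out.quantile(0.75)
    iqr = q3 - q1
    is_outlier = (out < q1 - iqr_factor * iqr) | (out > q3 + iqr_factor * iqr)
    out = out.mask(is_outlier)

    # Interpolation: fill gaps that have valid readings on both sides.
    out = out.interpolate(method="linear", limit_area="inside")

    # Mean imputation: fill whatever is left (gaps at the start or end of a column).
    out = out.fillna(out.mean())
    return out
```

Dropping outlier rows outright would also match the description, but it leaves holes in the hourly sequence that the LSTM input windows would then silently span.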

A Pearson correlation analysis was used to identify the most relevant features for wind and solar energy forecasting. The dataset was then split into 75% training (3 years) and 25% testing (1 year) for model evaluation.
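The correlation ranking and the split can be sketched with NumPy as follows. This is an illustration under assumptions: the feature matrix rows are in time order, features are ranked purely by absolute Pearson r with the power output (the study's actual selection cutoff is not stated), and the split is a simple positional cut, which corresponds to 3 years versus 1 year only if the four years are evenly sampled. Both helper names are hypothetical.

```python
import numpy as np

def rank_features_by_pearson(X, y, names):
    """Return (name, r) pairs sorted by |Pearson r| with the target, strongest first."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    # np.corrcoef returns a 2x2 matrix; the off-diagonal entry is Pearson's r
    r = [np.corrcoef(X[:, j], y)[0, 1] for j in range(X.shape[1])]
    return sorted(zip(names, r), key=lambda pair: abs(pair[1]), reverse=True)

def chronological_split(X, y, train_frac=0.75):
    """Split without shuffling: the first train_frac of rows train, the rest test."""
    cut = int(len(X) * train_frac)
    return X[:cut], X[cut:], y[:cut], y[cut:]
```

Splitting by position rather than at random keeps the test period strictly after the training period, so the model is never scored on hours it could have interpolated between.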

Algorithm Selection: Why LSTM?

Traditional time series forecasting models like ARIMA (Autoregressive Integrated Moving Average) assume a stationary series, so trends and seasonality must first be removed, usually by differencing. LSTM networks, by contrast, can learn long-term dependencies and non-linear patterns directly from sequential data with less of this manual preprocessing, although inputs still need to be scaled.
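As a toy illustration of the preprocessing ARIMA depends on (synthetic data, not the study's), the series below combines a linear trend with a 24-hour cycle. A seasonal difference at lag 24 (the D term of a seasonal ARIMA) removes the cycle and leaves only a constant offset of 12, the trend's rise over one day; a further first difference (the d term) takes that to zero, so no time-dependent structure remains.

```python
import numpy as np

# Synthetic hourly series for one week: upward trend plus a daily cycle
hours = np.arange(24 * 7)
series = 0.5 * hours + 10 * np.sin(2 * np.pi * hours / 24)

# Seasonal difference at lag 24: y[t] - y[t-24] cancels the daily cycle
seasonal_diff = series[24:] - series[:-24]

# First difference: y[t] - y[t-1] removes the remaining constant level
stationary = np.diff(seasonal_diff)

print(seasonal_diff[:3], stationary[:3])
```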

LSTM vs. ARIMA: Trade-offs

| Model | Strengths | Weaknesses |
|---|---|---|
| ARIMA | Strong in short-term forecasting, interpretable | Requires stationarity, struggles with non-linear trends |
| LSTM | Handles long-term dependencies, adapts to non-linear relationships | Requires more computational power, hyperparameter tuning complexity |

Given that renewable energy generation is highly dependent on fluctuating weather conditions, LSTM was selected for its ability to learn temporal dependencies effectively.

LSTM Model Architecture & Training

The LSTM model takes a fixed input window: the number of past timesteps it considers for each prediction. For example, to predict energy output at 2 PM, the model might use weather data from 11 AM, 12 PM, and 1 PM (a window size of 3 hourly timesteps).
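The windowing in the 2 PM example can be checked with a few lines of NumPy. Here the values are simply hours of the day so the pairing is easy to read; in a real pipeline the same indexing would select rows of the scaled feature matrix. `window_size = 3` mirrors the example above.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

# Hour of day for a short run of hourly observations: 8 AM ... 2 PM (24-hour clock)
hours = np.arange(8, 15)
window_size = 3

# Each input window holds window_size consecutive hours;
# its target is the hour immediately after the window.
inputs = sliding_window_view(hours[:-1], window_size)
targets = hours[window_size:]

for window, target in zip(inputs, targets):
    print(f"inputs {window.tolist()} -> predict hour {target}")
```

The last line printed is `inputs [11, 12, 13] -> predict hour 14`, i.e. 11 AM, 12 PM, and 1 PM feed the 2 PM prediction.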

Model Implementation in Python

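```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Input, LSTM, Dense

# Assumes X_train/X_test are 2-D (timesteps, features) arrays in time order,
# y_train/y_test are the matching power outputs, and window_size is set (e.g. 3).

# Scale features to [0, 1]; fit on training data only so test statistics don't leak
scaler = MinMaxScaler()
X_train_scaled = scaler.fit_transform(X_train)
X_test_scaled = scaler.transform(X_test)

# Build sliding windows of shape (samples, time steps, features).
# np.reshape alone cannot do this: each sample needs the window_size rows before it.
def make_windows(X, y, window_size):
    X_win = np.stack([X[i - window_size:i] for i in range(window_size, len(X))])
    y_win = np.asarray(y)[window_size:]  # target = output at the step after each window
    return X_win, y_win

X_train_reshaped, y_train_win = make_windows(X_train_scaled, y_train, window_size)
X_test_reshaped, y_test_win = make_windows(X_test_scaled, y_test, window_size)

# LSTM model architecture: two stacked LSTM layers and one output neuron
model = Sequential([
    Input(shape=(window_size, X_train_scaled.shape[1])),
    LSTM(50, activation='relu', return_sequences=True),  # pass full sequence to next LSTM
    LSTM(50, activation='relu'),
    Dense(1),  # predicted power output
])

# Compile and train the model
model.compile(optimizer='adam', loss='mse')
model.fit(X_train_reshaped, y_train_win, epochs=50, batch_size=32,
          validation_data=(X_test_reshaped, y_test_win))
```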

Results & Model Performance

The LSTM model produced accurate 4-hour-ahead power output forecasts, although performance varied slightly by season and by energy source:

- Solar Forecasting: Predictions were more stable during summer due to consistent sunlight exposure.

- Wind Forecasting: Greater variability was observed due to fluctuating wind speeds.
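The write-up does not describe how the 4-hour horizon was wired into training. One common option, shown here as an assumption rather than the study's method, is the direct strategy: keep the same input window but pair each window with the target `horizon` steps after its last row. With `horizon=1` this reduces to the one-step setup (an 11 AM to 1 PM window predicts 2 PM); with `horizon=4` the same window would predict 5 PM. The helper name `make_horizon_windows` is hypothetical.

```python
import numpy as np

def make_horizon_windows(X, y, window_size, horizon):
    """Pair each window of past features with the target `horizon` steps after its last row.

    X: (timesteps, features) array in time order; y: (timesteps,) target array.
    Returns X_win of shape (samples, window_size, features) and y_win of shape (samples,).
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n_samples = len(X) - window_size - horizon + 1
    if n_samples <= 0:
        raise ValueError("series too short for this window_size and horizon")
    # Window i covers rows i .. i + window_size - 1
    X_win = np.stack([X[i:i + window_size] for i in range(n_samples)])
    # Its target sits at row (i + window_size - 1) + horizon
    y_win = y[window_size + horizon - 1:]
    return X_win, y_win
```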

Future Work & Enhancements

Although LSTM models performed well, additional improvements can be explored:

✔ Integrating CNN-LSTM Hybrids – Using Convolutional Neural Networks (CNNs) to extract spatial patterns before feeding them into LSTMs could enhance performance

✔ Real-time Forecasting Pipeline – Deploying the model in a real-time energy forecasting system using cloud platforms like AWS or Azure

Post-Deployment Monitoring

Once deployed, a forecasting model requires continuous monitoring to maintain performance. Key monitoring strategies include:

- Model Drift Detection – If new meteorological patterns emerge, the model may need retraining with updated data.

- Error Metrics Tracking – Using Mean Absolute Error (MAE) and Root Mean Squared Error (RMSE) to ensure prediction quality remains high.

- Automated Retraining – Implementing pipelines that periodically update the model with new data to prevent degradation over time.
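The two metrics and a simple retraining trigger might look like the sketch below. The policy (flag retraining when RMSE over the most recent week of hourly forecasts exceeds 1.25 × the RMSE measured at deployment) is a hypothetical example; the window length and tolerance would need tuning for an actual system, and `needs_retraining` is an illustrative name.

```python
import numpy as np

def mae(y_true, y_pred):
    """Mean Absolute Error."""
    return float(np.mean(np.abs(np.asarray(y_true) - np.asarray(y_pred))))

def rmse(y_true, y_pred):
    """Root Mean Squared Error."""
    return float(np.sqrt(np.mean((np.asarray(y_true) - np.asarray(y_pred)) ** 2)))

def needs_retraining(y_true, y_pred, baseline_rmse, window=168, tolerance=1.25):
    """Hypothetical drift check: compare recent RMSE against the deployment baseline.

    window=168 assumes hourly forecasts, i.e. the last seven days.
    """
    recent_true = np.asarray(y_true, dtype=float)[-window:]
    recent_pred = np.asarray(y_pred, dtype=float)[-window:]
    return rmse(recent_true, recent_pred) > tolerance * baseline_rmse
```

RMSE is used for the trigger because it penalizes large misses more heavily than MAE, which matters when a few badly wrong hours can disrupt scheduling.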

Key Takeaways & Impact

🔹 Demonstrated the feasibility of short-term renewable energy forecasting using LSTMs

🔹 Provided insights for optimizing clean energy scheduling and reducing curtailment

This project not only highlights my expertise in deep learning for time series forecasting but also reflects my passion for leveraging AI to solve real-world sustainability challenges.